Moving to Progressive Delivery with Feature Flags

“Move fast and break things.” We've heard this countless times. But the best engineering teams ask a better question:

“How do we move fast without breaking things?”

When you’re in a highly regulated sector, the stakes are high. You must constantly keep up with customer (and market) demands, but your compliance and risk-reduction requirements remain the same. If you’re using older techniques like Big Bang deployments, every release comes with a risk of failure. The more you ship, the higher the risk.

Take CrowdStrike’s incident, for example. A single update to their Falcon sensor affected Windows systems globally, causing major outages in critical industries like airlines and government services.

For teams that don’t want to risk it all with every deployment, progressive delivery provides a safer approach. In CrowdStrike’s case, testing the update on a small subset of systems and scaling up gradually could have limited the damage, rather than hitting every connected system at once.

In this guide, we’ll explain how progressive delivery works and how to do it well using feature flagging and modern observability tools.

What is progressive delivery?

Progressive delivery is a deployment strategy that involves continuously deploying code to production but controlling who sees it and when. It builds upon continuous delivery practices by giving you fine-grained control over feature releases.

Progressive delivery using feature flags

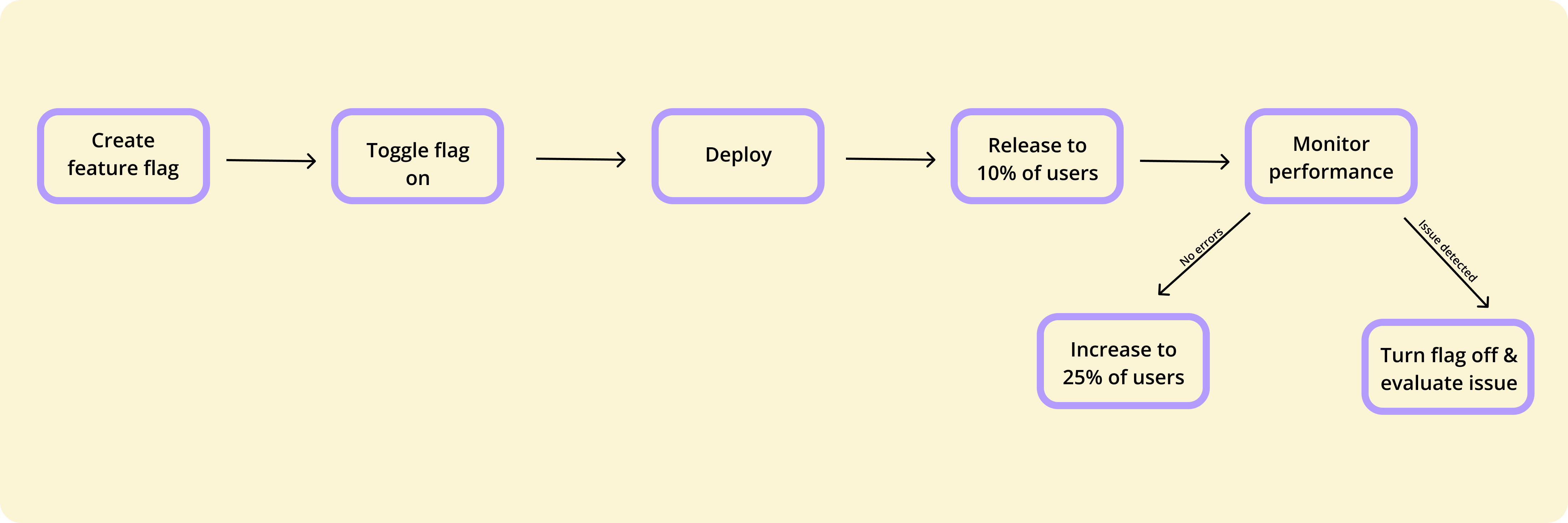

With progressive delivery, you introduce changes to small subsets of users first, monitor the impact, and gradually increase exposure as you gain confidence.

This controlled exposure model creates a safety net for deployments. Instead of shipping and praying it works, you get multiple decision points to evaluate if releasing is the right thing to do.

Progressive Delivery vs Continuous Delivery

Continuous Delivery methods automate build, test, and deployment pipelines—engineering teams ship code frequently and reliably. But once deployed, changes are exposed to all users immediately without a failsafe.

This is where progressive delivery differs. It adds a layer of control where you can still deploy frequently through your CI/CD pipeline, but you decide when and to whom the features become visible.

In Continuous Delivery, you mitigate risk primarily through pre-production testing, trying to catch issues before they reach end users. This happens at the infrastructure level, where shipping is automated. With progressive delivery, you distribute that risk through limited exposure and rollback capabilities using tools like feature flags, which operate at the software level.

How engineering teams have “shifted left” to progressive delivery and observability

Fifteen or so years ago, the classic 3-tier application stack (web server, app server, database) ran on relatively static infrastructure. You made changes infrequently, and traditional monitoring tools used simple statistical baselining to detect problems.

But today, the approach looks drastically different. Your infrastructure runs on multi-layered virtualised stacks spanning virtual machines (VMs), Kubernetes, or serverless platforms.

In many cases, teams are breaking down monoliths and embracing interconnected microservices with numerous third-party integrations throughout the architecture. And now, everyone wants to move faster, so deployments happen continuously rather than on fixed schedules.

This means it doesn’t make sense to introduce observability only after you’ve deployed the code. In fact, the more you shift left, moving testing and quality evaluation earlier in the process, the more problems you can prevent.

For instance, Flagsmith integrates with tools like Grafana to enable that. You can define your observability requirements during design and collect the data you need to make better decisions throughout the development lifecycle.

How feature flagging and observability work together

Observability and progressive delivery work hand in hand. Feature flags enable controlled releases, and observability gives you more confidence by showing you how the features behave in production. Together, they create a feedback loop where data drives progressive rollout decisions.

Without proper observability, feature flags could feel risky. You might toggle features on or off but remain blind to their effects. Conversely, without feature flags, observability data might reveal problems, but you'd lack granular control to deal with them quickly.

In short, engineering teams that incorporate both these tools to deliver features progressively do the following:

- Reduce operational risk as fewer users experience problems

- Lower costs because issues are detected earlier

- Pass compliance requirements using detailed audit logs

How do feature flags power progressive delivery?

Progressive delivery requires you to deploy code without immediately exposing new functionality to users. Feature toggles enable this by letting you wrap code to control the release of this functionality.
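As a minimal sketch of what wrapping code in a toggle can look like, the Python snippet below gates a new code path behind a flag lookup. The in-memory `FLAGS` dictionary, the `is_enabled` helper, the flag name, and the two onboarding functions are hypothetical stand-ins for illustration; in practice, a feature flagging platform’s SDK would supply the flag state.

```python
# Hypothetical in-memory flag store; a real setup would fetch flag state
# from a feature flagging service instead of a hard-coded dictionary.
FLAGS = {"newonboardingflow": False}


def is_enabled(flag_name: str) -> bool:
    """Return the flag's state, defaulting to off for unknown flags."""
    return FLAGS.get(flag_name, False)


def legacy_onboarding(user_id: str) -> str:
    return f"legacy onboarding for {user_id}"


def new_onboarding(user_id: str) -> str:
    return f"new onboarding for {user_id}"


def start_onboarding(user_id: str) -> str:
    # Both code paths are deployed; the flag decides which one users see.
    if is_enabled("newonboardingflow"):
        return new_onboarding(user_id)
    return legacy_onboarding(user_id)
```

Defaulting unknown flags to off means a missing or misconfigured flag falls back to the existing behavior rather than exposing unfinished work.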

A major benefit is that it allows developers to merge code into the main branch more frequently. You don’t have to expose incomplete or untested features—you also don’t need to wait until they’re fully ready to deploy them. Additionally—and this is key—these tools also give product teams more control over the release process without needing a developer to help.

So, when you actually release and the data flows through observability tools, you can see how it performs in a real user environment. Interestingly, the relationship between feature flags and observability runs both ways. You see how your feature performs using observability tools and in turn, you can use that data to turn flags on or off automatically (based on certain thresholds).

In this webinar, Kyle Johnson, Co-Founder of Flagsmith, and Andreas Grabner, Global DevRel at Dynatrace, explained how the Flagsmith and Dynatrace integration helps engineering teams:

- Directly correlate user experiences with specific feature configurations. For example, you can see if users with a new payment processing flow enabled are experiencing higher error rates than those with the feature disabled.

- Segment metrics based on feature flag states to compare different variations and see what works best.

- Set up automated responses based on specific thresholds, such as turning a flag off when error rates cross a limit.

Plus, your entire team eventually becomes more comfortable shipping incremental changes when they know the release process includes safety mechanisms.
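To make the threshold idea concrete, here is a rough Python sketch that segments request outcomes by flag state and decides whether the flag should be switched off. The `RequestOutcome` record, the function names, and the 2-percentage-point threshold are assumptions for illustration, not part of the Flagsmith or Dynatrace APIs. In a real setup, the observability platform would supply these numbers.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RequestOutcome:
    flag_enabled: bool  # was the feature on for this request?
    failed: bool        # did the request end in an error?


def error_rate(outcomes: List[RequestOutcome], flag_enabled: bool) -> float:
    """Error rate for the cohort with the given flag state (0.0 if empty)."""
    cohort = [o for o in outcomes if o.flag_enabled == flag_enabled]
    if not cohort:
        return 0.0
    return sum(o.failed for o in cohort) / len(cohort)


def should_disable_flag(outcomes: List[RequestOutcome],
                        max_increase: float = 0.02) -> bool:
    """True when the flagged cohort's error rate exceeds the unflagged
    cohort's rate by more than the allowed increase."""
    delta = error_rate(outcomes, True) - error_rate(outcomes, False)
    return delta > max_increase
```

Comparing the two cohorts against each other, rather than against a fixed number, keeps the check meaningful when overall traffic is having a bad day for unrelated reasons.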

How to build a progressive delivery pipeline with the right tech stack

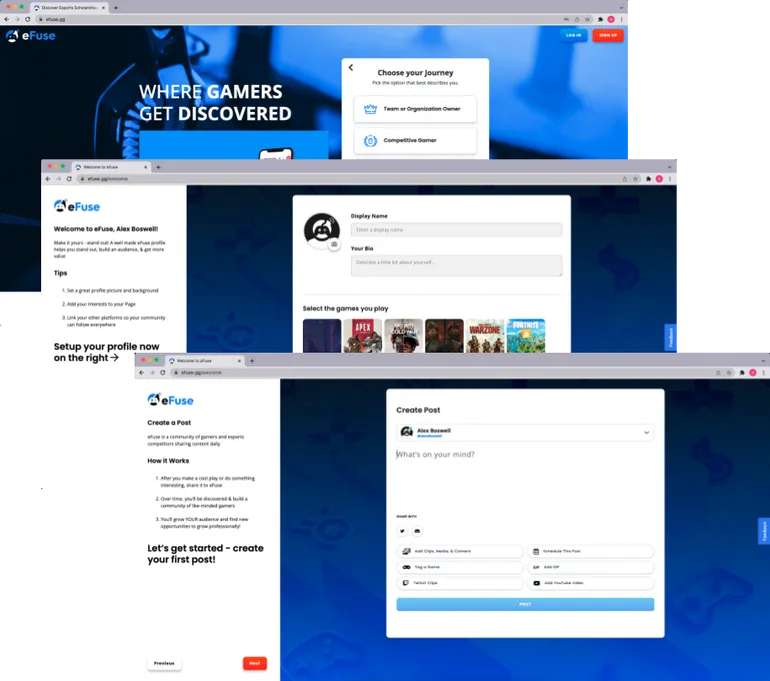

Let’s say you're the lead developer for a mid-sized banking application. Your team has built a redesigned onboarding flow that promises to increase conversion rates and reduce customer support tickets. If you want to make sure it works, try the progressive delivery method. Your rollout could look like this with Flagsmith, Grafana, and Dynatrace:

- Create the flag: Using Flagsmith, set up a flag called ‘newonboardingflow’ to control access to the new onboarding module.

- Conduct a gradual rollout: Incrementally expose the feature to larger user segments. For example, you might start with 10% and increase the percentage to 20%, 30%, and so on.

- Monitor performance: Track performance and user metrics across both versions. With Grafana, you can use annotated queries to see how increasing the percentage affects user behavior. And with Dynatrace, you can monitor issues in real time and mark the feature as “healthy” or “critical” depending on the data.

- Expand or roll back: Based on the observability data, either continue the rollout or revert to the previous version.
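The gradual rollout and expand-or-roll-back steps above can be sketched in Python as follows. This is an illustration of the general idea, assuming a hash-based bucketing scheme and a made-up stage schedule; it is not a description of how any particular flagging platform assigns users internally, and a platform would normally handle this for you.

```python
import hashlib

# Illustrative rollout stages (percent of users); the schedule is an assumption.
ROLLOUT_STAGES = [10, 20, 30, 50, 100]


def bucket(user_id: str, flag_name: str) -> float:
    """Map a user to a stable value in [0, 100) for a given flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode()).hexdigest()
    return int(digest[:8], 16) / 2**32 * 100


def in_rollout(user_id: str, flag_name: str, percentage: float) -> bool:
    """A user is included when their bucket falls below the rollout percentage."""
    return bucket(user_id, flag_name) < percentage


def next_percentage(current: int, healthy: bool) -> int:
    """Advance to the next stage when healthy; otherwise roll back to 0."""
    if not healthy:
        return 0
    later = [stage for stage in ROLLOUT_STAGES if stage > current]
    return later[0] if later else current
```

Because each user’s bucket is derived from a hash rather than a random draw, anyone included at 10% stays included at 20%, so users don’t flip between the old and new experience as the rollout grows.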

🏴 Note: Flagsmith also has a “Feature Health” capability that lets you monitor the feature’s status and performance within the platform.

What are the common pitfalls to avoid when using progressive delivery?

Here are the most common issues teams encounter when implementing progressive delivery—and how to avoid them:

1. They neglect feature flag archiving and cleanup

Feature flags are inherently temporary control points, with a few exceptions like kill switches. But if you leave them in your codebase after they’ve served their purpose, it creates "flag debt" that bloats your codebase and could cause serious unintended app behavior.

You might add a flag today for a major release, but six months later, no one remembers why it exists or whether they should remove it.

Pro tip: Follow feature flag best practices like creating a flag lifecycle policy and naming convention to avoid this issue. Document the purpose of each flag along with on and off dates. If it’s a long-lived flag, explain why that’s the case.
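One way to make a lifecycle policy enforceable is to keep the documentation as data. The Python sketch below assumes a hypothetical `FlagRecord` structure with a purpose, creation date, and planned removal date, and lists the flags that have outlived their removal date. The field names are illustrative, not a schema from any flagging tool.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class FlagRecord:
    name: str
    purpose: str
    created_on: date
    remove_by: Optional[date]    # None marks a deliberately long-lived flag
    long_lived_reason: str = ""  # filled in when remove_by is None


def overdue_flags(records: List[FlagRecord], today: date) -> List[str]:
    """Names of flags whose planned removal date has already passed."""
    return [
        r.name
        for r in records
        if r.remove_by is not None and r.remove_by < today
    ]
```

Running a check like this in CI or on a schedule turns “no one remembers why it exists” into a visible list of flags to clean up.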

2. They don’t set up the observability tools properly

If you can’t see what’s happening with your flag, you end up with blind spots in your development and production environments.

For example, a team might roll out a new driving license application portal to 5% of users. But without proper monitoring, they miss that the new portal is increasing onboarding times by 30%.

Pro tip: Think about the three pillars of observability: metrics, logs, and traces. Set up your monitoring so that, together, they capture both the user impact and the performance of each flagged feature.

3. They don’t fix misalignment between developers and product teams

Let’s say your product team wants to roll out a feature to a specific customer segment, and they’ve decided to use an existing flag. The flag may already have a purpose—for example, your developers could've set it up to conduct another test. If you don’t have the context (and documentation) to be sure you can use this flag, you could be causing more problems by switching it back on.

You flip the switch, and the next thing you know, your entire app is experiencing downtime.

Pro tip: Document every flag’s purpose in a living document to share with the product team. Also, make sure you implement role-based access control (RBAC) so that only the right stakeholders can make changes to the flags.

4. They complicate the flag’s usage and don’t account for nested dependencies

You might become so comfortable with feature flags that you start implementing them without realising how they affect the entire live environment.

Avoid nesting flags more than one level deep, and even then, nest sparingly. You want to avoid creating a web of conditionals that complicates observability and performance measurement.

Pro tip: Always check if a flag impacts other components in your environment. But if you want to avoid this issue altogether, maintain solid documentation around feature flags. You can use a feature flagging platform like Flagsmith to track these dependencies.
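If you record flag dependencies somewhere, the one-level nesting rule can be checked mechanically. The Python sketch below assumes a hypothetical `depends_on` mapping from each flag to the flag it sits inside; the mapping format and function names are made up for illustration.

```python
from typing import Dict, List


def nesting_depth(flag: str, depends_on: Dict[str, str]) -> int:
    """Count how many parent flags sit above the given flag."""
    depth = 0
    seen = {flag}
    while flag in depends_on:
        flag = depends_on[flag]
        if flag in seen:
            raise ValueError(f"circular dependency involving {flag!r}")
        seen.add(flag)
        depth += 1
    return depth


def too_deep(depends_on: Dict[str, str], max_depth: int = 1) -> List[str]:
    """Flags nested deeper than the allowed number of levels."""
    flags = set(depends_on) | set(depends_on.values())
    return sorted(f for f in flags if nesting_depth(f, depends_on) > max_depth)
```

For example, with `{"new_checkout": "new_cart", "express_pay": "new_checkout"}`, only `express_pay` is reported, since it sits two levels below `new_cart`.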

5. They silo flag data from other platforms in their tech stack

If you use a feature flagging platform, don’t let the data just sit there. Consider integrating it with tools like Grafana or Dynatrace to let them talk to each other and connect the dots between flag changes and impact.

For instance, your team might struggle to tie a sudden increase in drop-off rates to a flag change if the two systems don’t share data. As a result, you can’t respond quickly because you’ll spend hours teasing out the root cause.

Pro tip: If you don’t have access to specific integrations, use webhooks or APIs to push the flag analytics data into other relevant data tools.
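As a rough sketch of the webhook approach, the Python function below converts a flag change event into a payload for Grafana’s annotations HTTP API, which accepts a `time` in epoch milliseconds, a list of `tags`, and a `text` description. The shape of the incoming `event` dictionary is hypothetical, since the real webhook payload depends on your flagging platform, and actually sending the request is left out.

```python
from datetime import datetime


def to_grafana_annotation(event: dict) -> dict:
    """Build an annotation payload from a (hypothetical) flag change event."""
    changed_at = datetime.fromisoformat(event["changed_at"])
    state = "on" if event["enabled"] else "off"
    return {
        # Grafana expects annotation times as epoch milliseconds.
        "time": int(changed_at.timestamp() * 1000),
        "tags": ["feature-flag", event["flag"], event["environment"]],
        "text": f"{event['flag']} set to {state} by {event['changed_by']}",
    }
```

With flag changes marked on the same dashboards as your metrics, a spike in drop-off rates that lines up with an annotation points straight at the flag to investigate.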

Progressive delivery de-risks deployment and lets you release with confidence

The tension between speed and stability doesn’t need to be a zero-sum game. Progressive delivery replaces “move fast and break things” with a process that lets you move fast while making every deployment safer.

If you're still deploying features in an all-or-nothing approach, it’s time to make the switch. With progressive delivery, you can confidently ship code without any lingering anxiety.

You can make better decisions through real-world feedback and squash the dreaded 3 AM alerts.

Frequently asked questions

1. What are the types of progressive delivery methods?

Progressive delivery encompasses several deployment strategies, such as:

- Canary deployments, where you route a small percentage of traffic to a new version of your application while directing the majority to the stable version.

- Blue-green deployments, where you maintain two identical production environments, “blue” running the current version and “green” running the new version, and switch traffic over once the new version is verified.

- Ring-based deployments, which extend the canary concept by defining explicit “rings” of users for progressive exposure.

- A/B testing, where you test multiple implementations to determine which performs better according to specific parameters you’ve set.

2. How does progressive delivery work with containerised environments like Kubernetes?

Kubernetes provides capabilities like rolling updates that gradually replace pods, while extensions such as Flagger and Argo Rollouts add sophisticated canary and blue-green patterns. Feature flags complement Kubernetes by controlling functionality within containers—giving you both infrastructure-level and application-level progressive delivery capabilities.

3. What’s the recommended tech stack for using progressive delivery?

Ideally, you need a combination of feature flagging and observability platforms. For example:

- Feature flagging: Flagsmith provides feature flag management with flexible deployment options, comprehensive targeting, and integrations with observability tools.

- Observability (flag impact): Grafana delivers customisable dashboards with annotations that correlate feature flag changes to performance metrics.

- Observability (issue detection): Dynatrace offers deep application monitoring with AI-powered problem detection that captures flag states, plus SLO monitoring to validate release quality.
